Getting to know the data#

Understanding the data is a foundational step in any machine learning project and is crucial for achieving reproducibility in research.

One of the primary reasons why getting to know the data is essential is its direct impact on the reliability and generalizability of the machine learning models. We can identify potential biases, anomalies, or inconsistencies that may affect model performance by exploring the data.

This understanding enables us to apply appropriate preprocessing techniques, such as handling missing values, addressing class imbalances, or feature normalisation, to ensure that the model learns meaningful patterns from the data.

Moreover, gaining insights into the data can guide us as researchers in selecting the most appropriate algorithms and architectures for these tasks. Understanding the distribution and relationships within the data allows us to choose well-suited models to capture complex patterns and make accurate predictions.

This tutorial uses the Palmer Penguins dataset. Data were collected and made available by Dr. Kristen Gorman and the Palmer Station, Antarctica LTER, a member of the Long Term Ecological Research Network.

Let’s dive into some quick exploration of the data!

from pathlib import Path

DATA_FOLDER = Path("..", "..") / "data"

DATA_FILEPATH = DATA_FOLDER / "penguins.csv"

We’ll use the pandas library to load an pre-process the data. It has quite a few convenience functions like loading CSVs or dropping columns.

import pandas as pd

penguins_raw = pd.read_csv(DATA_FILEPATH)

penguins_raw.head()

| studyName | Sample Number | Species | Region | Island | Stage | Individual ID | Clutch Completion | Date Egg | Culmen Length (mm) | Culmen Depth (mm) | Flipper Length (mm) | Body Mass (g) | Sex | Delta 15 N (o/oo) | Delta 13 C (o/oo) | Comments | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | PAL0708 | 1 | Adelie Penguin (Pygoscelis adeliae) | Anvers | Torgersen | Adult, 1 Egg Stage | N1A1 | Yes | 2007-11-11 | 39.1 | 18.7 | 181.0 | 3750.0 | MALE | NaN | NaN | Not enough blood for isotopes. |

| 1 | PAL0708 | 2 | Adelie Penguin (Pygoscelis adeliae) | Anvers | Torgersen | Adult, 1 Egg Stage | N1A2 | Yes | 2007-11-11 | 39.5 | 17.4 | 186.0 | 3800.0 | FEMALE | 8.94956 | -24.69454 | NaN |

| 2 | PAL0708 | 3 | Adelie Penguin (Pygoscelis adeliae) | Anvers | Torgersen | Adult, 1 Egg Stage | N2A1 | Yes | 2007-11-16 | 40.3 | 18.0 | 195.0 | 3250.0 | FEMALE | 8.36821 | -25.33302 | NaN |

| 3 | PAL0708 | 4 | Adelie Penguin (Pygoscelis adeliae) | Anvers | Torgersen | Adult, 1 Egg Stage | N2A2 | Yes | 2007-11-16 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | Adult not sampled. |

| 4 | PAL0708 | 5 | Adelie Penguin (Pygoscelis adeliae) | Anvers | Torgersen | Adult, 1 Egg Stage | N3A1 | Yes | 2007-11-16 | 36.7 | 19.3 | 193.0 | 3450.0 | FEMALE | 8.76651 | -25.32426 | NaN |

We can see that this dataset has a lot more potential than your usual “toy dataset”. We get the full provenance of this data including the study a penguin was measured in, where it lives, and even comments by the researchers collecting the data!

And honestly, this looks like a lot. Some of these variables might actually leak information, like the location or study number telling us what penguin colony was sampled.

Let’s reduce this to some numerical columns Culmen Length (mm), Culmen Depth (mm), Flipper Length (mm), then Sex as a categorical value in case penguins exhibit sexual dimorphism and the species as our target column to make it easier for us.

num_features = ["Culmen Length (mm)", "Culmen Depth (mm)", "Flipper Length (mm)"]

cat_features = ["Sex"]

features = num_features + cat_features

target = ["Species"]

penguins = penguins_raw[features+target]

penguins

| Culmen Length (mm) | Culmen Depth (mm) | Flipper Length (mm) | Sex | Species | |

|---|---|---|---|---|---|

| 0 | 39.1 | 18.7 | 181.0 | MALE | Adelie Penguin (Pygoscelis adeliae) |

| 1 | 39.5 | 17.4 | 186.0 | FEMALE | Adelie Penguin (Pygoscelis adeliae) |

| 2 | 40.3 | 18.0 | 195.0 | FEMALE | Adelie Penguin (Pygoscelis adeliae) |

| 3 | NaN | NaN | NaN | NaN | Adelie Penguin (Pygoscelis adeliae) |

| 4 | 36.7 | 19.3 | 193.0 | FEMALE | Adelie Penguin (Pygoscelis adeliae) |

| ... | ... | ... | ... | ... | ... |

| 339 | 55.8 | 19.8 | 207.0 | MALE | Chinstrap penguin (Pygoscelis antarctica) |

| 340 | 43.5 | 18.1 | 202.0 | FEMALE | Chinstrap penguin (Pygoscelis antarctica) |

| 341 | 49.6 | 18.2 | 193.0 | MALE | Chinstrap penguin (Pygoscelis antarctica) |

| 342 | 50.8 | 19.0 | 210.0 | MALE | Chinstrap penguin (Pygoscelis antarctica) |

| 343 | 50.2 | 18.7 | 198.0 | FEMALE | Chinstrap penguin (Pygoscelis antarctica) |

344 rows × 5 columns

Much easier to deal with for a non-expert in Penguin studies!

Data Visualization#

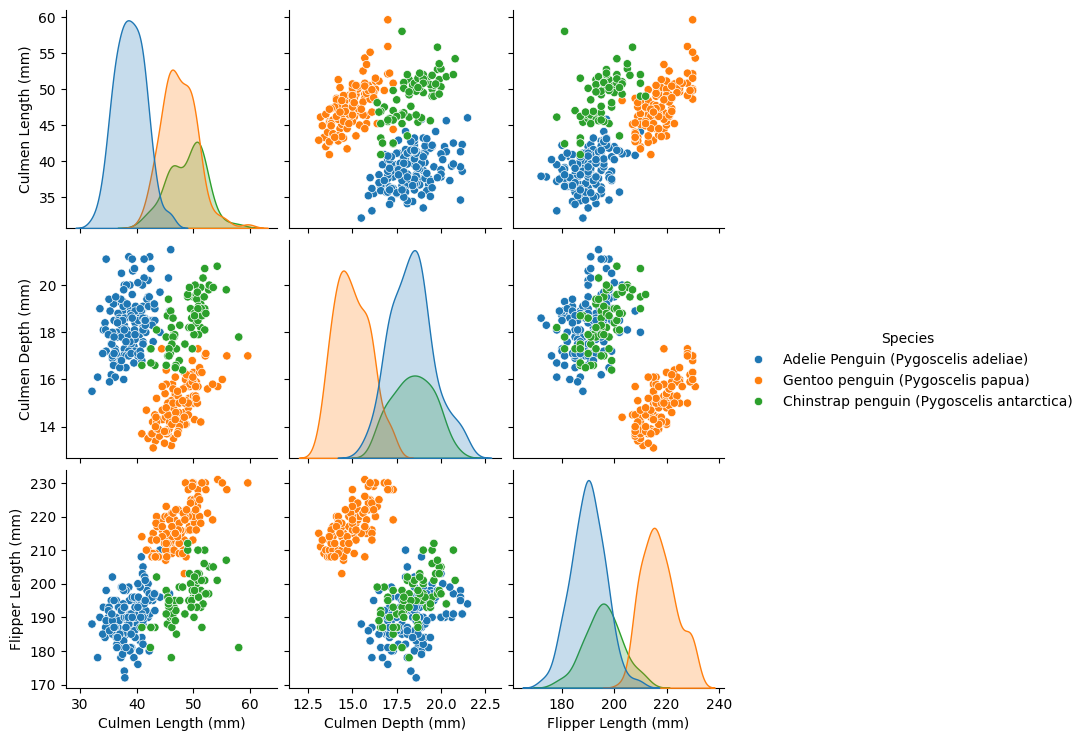

Data visualization is indispensable for effective data exploration in machine learning research.

We can quickly grasp the datasets’ distribution, relationships, and anomalies through visual representations like scatter plots, histograms, and box plots. These visualizations provide invaluable insights into the underlying structure of the data, enabling researchers to identify patterns, trends, and outliers that may influence model performance.

Moreover, visualizations facilitate collaboration and communication among team members, as they provide intuitive ways to convey complex insights and findings.

In essence, data visualization is a cornerstone of data exploration, enhancing the reproducibility and reliability of machine learning research by enabling researchers to gain a deeper understanding of their datasets.

For this visualization we’ll use seaborn which is a statistical plotting library that makes our job much easier than the more granular matplotlib.

import seaborn as sns

pairplot_figure = sns.pairplot(penguins, hue="Species")

Looks like we’re getting some good separation of the clusters already!

This promises to be a good dataset for fairly simple machine learning algorithms.

Data cleaning#

That means we can probably do some cleaning and get ready to build some good machine learning models.

Dropping NaNs, or missing values, during the data cleaning process is the most basic form of cleaning data. Some machine learning algorithms can actually process NaNs, which is a great way to deal with missing data, but in our case we’re choosing the simplest way. Don’t take this as the best practice though!

By removing rows or columns containing NaNs, researchers can prevent these issues and ensure that their models learn from complete and consistent data. While imputation techniques exist to fill in missing values, dropping NaNs is often preferred when the proportion of missing values is small relative to the dataset size or when imputation could introduce inaccuracies.

Sometimes we can even gain information from NaNs, when they’re not “missing at random”. Then we can actually pre-process the data by adding a boolean feature that encodes the “missingness” of a variable and use mean imputation on the data column.

penguins = penguins.dropna(axis='rows')

penguins

| Culmen Length (mm) | Culmen Depth (mm) | Flipper Length (mm) | Sex | Species | |

|---|---|---|---|---|---|

| 0 | 39.1 | 18.7 | 181.0 | MALE | Adelie Penguin (Pygoscelis adeliae) |

| 1 | 39.5 | 17.4 | 186.0 | FEMALE | Adelie Penguin (Pygoscelis adeliae) |

| 2 | 40.3 | 18.0 | 195.0 | FEMALE | Adelie Penguin (Pygoscelis adeliae) |

| 4 | 36.7 | 19.3 | 193.0 | FEMALE | Adelie Penguin (Pygoscelis adeliae) |

| 5 | 39.3 | 20.6 | 190.0 | MALE | Adelie Penguin (Pygoscelis adeliae) |

| ... | ... | ... | ... | ... | ... |

| 339 | 55.8 | 19.8 | 207.0 | MALE | Chinstrap penguin (Pygoscelis antarctica) |

| 340 | 43.5 | 18.1 | 202.0 | FEMALE | Chinstrap penguin (Pygoscelis antarctica) |

| 341 | 49.6 | 18.2 | 193.0 | MALE | Chinstrap penguin (Pygoscelis antarctica) |

| 342 | 50.8 | 19.0 | 210.0 | MALE | Chinstrap penguin (Pygoscelis antarctica) |

| 343 | 50.2 | 18.7 | 198.0 | FEMALE | Chinstrap penguin (Pygoscelis antarctica) |

334 rows × 5 columns

DATA_CLEAN_FILEPATH = DATA_FOLDER / "penguins_clean.csv"

penguins.to_csv(DATA_CLEAN_FILEPATH, index=False)

Not too bad it looks like we lost ten rows. That’s manageable, it’s a toy dataset after all.

So let’s build a small model to classify penguins!

Machine Learning#

First, it’s essential to split the data into separate sets for training and testing purposes.

This split enables us to evaluate the performance of our machine learning model effectively. By training the model on a portion of the data and testing it on unseen data, we can assess whether the model has learned generalizable patterns or has simply memorized the training examples.

When a model that fails to generalize to unseen data this is known as overfitting. Overfitting is a phenomenon where the model performs well on the training data but fails on non-training data. Overfitting leads to poor performance and unreliable predictions in real-world scenarios.

Therefore, by splitting the data, we can detect and mitigate overfitting, ensuring that our model learns meaningful patterns that generalize to unseen data.

We’ll choose 70% of the data as training data here:

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(penguins[features], penguins[target], train_size=.7)

X_train

| Culmen Length (mm) | Culmen Depth (mm) | Flipper Length (mm) | Sex | |

|---|---|---|---|---|

| 189 | 44.4 | 17.3 | 219.0 | MALE |

| 137 | 40.2 | 20.1 | 200.0 | MALE |

| 38 | 37.6 | 19.3 | 181.0 | FEMALE |

| 22 | 35.9 | 19.2 | 189.0 | FEMALE |

| 267 | 55.1 | 16.0 | 230.0 | MALE |

| ... | ... | ... | ... | ... |

| 148 | 36.0 | 17.8 | 195.0 | FEMALE |

| 231 | 45.2 | 16.4 | 223.0 | MALE |

| 140 | 40.2 | 17.1 | 193.0 | FEMALE |

| 319 | 45.5 | 17.0 | 196.0 | FEMALE |

| 287 | 51.7 | 20.3 | 194.0 | MALE |

233 rows × 4 columns

y_train

| Species | |

|---|---|

| 189 | Gentoo penguin (Pygoscelis papua) |

| 137 | Adelie Penguin (Pygoscelis adeliae) |

| 38 | Adelie Penguin (Pygoscelis adeliae) |

| 22 | Adelie Penguin (Pygoscelis adeliae) |

| 267 | Gentoo penguin (Pygoscelis papua) |

| ... | ... |

| 148 | Adelie Penguin (Pygoscelis adeliae) |

| 231 | Gentoo penguin (Pygoscelis papua) |

| 140 | Adelie Penguin (Pygoscelis adeliae) |

| 319 | Chinstrap penguin (Pygoscelis antarctica) |

| 287 | Chinstrap penguin (Pygoscelis antarctica) |

233 rows × 1 columns

Now we can build a machine learning model.

Here we’ll use the scikit-learn pipeline model. This makes it really easy for us to train prepocessors and models on the training data alone and cleanly apply to the test data set without leakage.

Pre-processing#

Pre-processing data is the next critical step in preparing it for machine learning models, and the scikit-learn library offers powerful tools like StandardScaler and OneHotEncoder to facilitate this process.

The StandardScaler is commonly used to standardize features by removing the mean and scaling them to unit variance, ensuring that each feature contributes equally to the model’s performance. This transformation is particularly beneficial for algorithms sensitive to feature scaling, such as support vector machines and k-nearest neighbours.

On the other hand, OneHotEncoder is instrumental in handling categorical variables by converting them into a binary representation, where each category becomes a binary feature. This transformation prevents the model from interpreting categorical variables as ordinal, e.g. category 1 is “stronger” than category 0, and ensures that all categories are treated equally.

Together, these pre-processing techniques help enhance the performance and robustness of machine learning models, ready to be fed into the model for training and evaluation.

Statistically and scientifically valid results come from proper treatment of our data. Unfortunately, we can overfit manually if we don't split out a test set before pre-processing.

from sklearn.preprocessing import StandardScaler, OneHotEncoder

num_transformer = StandardScaler()

cat_transformer = OneHotEncoder(handle_unknown='ignore')

The ColumnTransformer is a neat tool that can apply your preprocessing steps to the right columns in your dataset.

This transformer is pretty efficient because it allows us to target individual subsets of data for transformation, ensuring that preprocessing techniques are applied only where necessary.

Moreover, leveraging a Pipeline instead of a standalone StandardScaler or ColumnTransformer offers even greater flexibility, enabling the integration of more intricate preprocessing workflows, which we’ll see further below. This versatility is particularly valuable when working on complex machine learning tasks that demand comprehensive preprocessing strategies beyond the scope of basic projects, enabling researchers to unleash the full potential of their data.

from sklearn.compose import ColumnTransformer

preprocessor = ColumnTransformer(transformers=[

('num', num_transformer, num_features),

('cat', cat_transformer, cat_features)

])

Ok now we’ll build a reproducible Pipeline including a support-vector machine as the classifier!

from sklearn.pipeline import Pipeline

from sklearn.svm import SVC

model = Pipeline(steps=[

('preprocessor', preprocessor),

('classifier', SVC()),

])

model

Pipeline(steps=[('preprocessor',

ColumnTransformer(transformers=[('num', StandardScaler(),

['Culmen Length (mm)',

'Culmen Depth (mm)',

'Flipper Length (mm)']),

('cat',

OneHotEncoder(handle_unknown='ignore'),

['Sex'])])),

('classifier', SVC())])In a Jupyter environment, please rerun this cell to show the HTML representation or trust the notebook. On GitHub, the HTML representation is unable to render, please try loading this page with nbviewer.org.

Pipeline(steps=[('preprocessor',

ColumnTransformer(transformers=[('num', StandardScaler(),

['Culmen Length (mm)',

'Culmen Depth (mm)',

'Flipper Length (mm)']),

('cat',

OneHotEncoder(handle_unknown='ignore'),

['Sex'])])),

('classifier', SVC())])ColumnTransformer(transformers=[('num', StandardScaler(),

['Culmen Length (mm)', 'Culmen Depth (mm)',

'Flipper Length (mm)']),

('cat', OneHotEncoder(handle_unknown='ignore'),

['Sex'])])['Culmen Length (mm)', 'Culmen Depth (mm)', 'Flipper Length (mm)']

StandardScaler()

['Sex']

OneHotEncoder(handle_unknown='ignore')

SVC()

We can see a nice model representation here.

You can click on the different modules that will tell you which arguments were passed into the pipeline. In our case, how we handle unknown values in the OneHotEncoder.

The Pipeline offers a convenient solution to prevent unintentional leakage of information from test data during preprocessing. By encapsulating preprocessing steps within a pipeline, each transformation is applied sequentially and independently to the data, ensuring that normalizers and other preprocessing techniques are fitted solely on the training data.

This prevents the inadvertent fitting of preprocessors to the test data, mitigating the risk of information leakage and preserving the integrity of the evaluation process. One way to avoid accidents in our research!

Model Training#

Now it’s time to fit our Support-Vector Machine to our training data.

This process involves training the model on the provided training data to learn patterns and relationships within the features and their corresponding labels. During the fitting process, the model adjusts its parameters to minimize the difference between its predictions and the actual target values.

The .fit() method encapsulates this training process, allowing the model to learn from the training data and prepare for subsequent evaluation and prediction tasks.

(And it’s all in the Pipeline, which makes sure our pre-processors get fitted to the same exact data!)

model.fit(X_train, y_train[target[0]])

Pipeline(steps=[('preprocessor',

ColumnTransformer(transformers=[('num', StandardScaler(),

['Culmen Length (mm)',

'Culmen Depth (mm)',

'Flipper Length (mm)']),

('cat',

OneHotEncoder(handle_unknown='ignore'),

['Sex'])])),

('classifier', SVC())])In a Jupyter environment, please rerun this cell to show the HTML representation or trust the notebook. On GitHub, the HTML representation is unable to render, please try loading this page with nbviewer.org.

Pipeline(steps=[('preprocessor',

ColumnTransformer(transformers=[('num', StandardScaler(),

['Culmen Length (mm)',

'Culmen Depth (mm)',

'Flipper Length (mm)']),

('cat',

OneHotEncoder(handle_unknown='ignore'),

['Sex'])])),

('classifier', SVC())])ColumnTransformer(transformers=[('num', StandardScaler(),

['Culmen Length (mm)', 'Culmen Depth (mm)',

'Flipper Length (mm)']),

('cat', OneHotEncoder(handle_unknown='ignore'),

['Sex'])])['Culmen Length (mm)', 'Culmen Depth (mm)', 'Flipper Length (mm)']

StandardScaler()

['Sex']

OneHotEncoder(handle_unknown='ignore')

SVC()

We can see that we get a decent score on the training data.

This metric only tells us how well the model can perform on the data it has seen, we don’t know anything about generalization and actual “learning” yet.

model.score(X_train, y_train)

0.9957081545064378

To evaluate how well our model learned, we check the model against the test data one final time.

This invalidates scientific results and must be avoided. If you need to manually tweak the model, use a three-way `train-val-test` split.

Only evaluate on the test set once!

model.score(X_test, y_test)

1.0

That’s an extraordinary score!

We can predict our penguins with 100%, so let’s evaluate these models in the following pages!